The AI Compute Bottleneck: Centralized Cloud in the AI Revolution

Introduction: What’s stopping AI from major breakthroughs?

AI has been the dominant topic in tech for the past three years. From Large Language Models (LLMs) that can write like humans, to multimodal AI that understands images and sounds, to increasingly sophisticated AI agents, AI's capabilities are expanding rapidly. But beneath this exciting surface lies a growing problem: a "compute bottleneck" that threatens to slow down the AI revolution. Today's giants of computing power, centralized cloud providers such as AWS, Azure, and Google Cloud, are struggling to keep up with the insatiable demand for massive, flexible processing power.

The Insatiable Appetite of New-Generation AI

Today's most advanced AI models are computational beasts. Training a single cutting-edge model, such as a powerful language model or a multimodal model that understands both images and sounds, demands an enormous amount of processing power from specialized chips known as GPUs. And this demand isn't limited to "training": even running these models for everyday tasks, which we call "inference", requires significant computational muscle.

Let's look at the numbers to see how fast AI is growing. Experts predict that demand for AI computing power will grow roughly twelvefold by 2028. To put the scale in perspective, imagine trying to store millions of movies every single second by 2030: that is how much new data is expected to be created, and AI will need to process a large share of it. Growth this fast means that even today's biggest cloud companies can't keep up. For example, available computing power currently covers less than 20% of what is needed for "open models", which are vital for letting everyone build new AI tools.

Source: Statista

The Cracks in the Centralized Cloud Model

While centralized cloud providers have been instrumental in the early stages of AI development, their traditional model is showing significant limitations when faced with this new wave of AI:

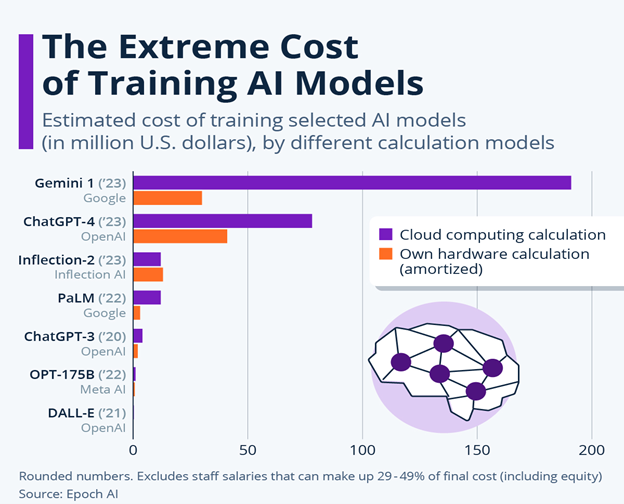

1. Sky-High Costs: Running large-scale AI models on centralized clouds can be incredibly expensive. The specialized hardware, like high-end GPUs, comes with a hefty price tag. For example, some top-tier GPU instances can cost upwards of $30,000 per month. Beyond the raw compute, there are often hidden fees for data transfer (egress fees) and storage, which can quickly spiral out of control, making AI adoption unaffordable for many businesses and researchers.

2. Closed Systems and Vendor Lock-In: Think about your smartphone. If you own an iPhone, you're deeply tied into Apple's ecosystem: apps from the App Store, services like iCloud, and so on. Switching to an Android phone is possible, but rarely smooth. You might have to buy apps again, learn a new interface, and carefully transfer your data. Centralized cloud providers work the same way. Once you build your AI with a provider's specific tools and services, moving to another provider is like switching phone ecosystems: it's a hassle, it costs time and money, and it makes it hard to adopt different tools that might suit you better. This "lock-in" can slow down innovation because you're tied to one system.

3. Unsuitable for Mass AI Adoption: The current centralized model struggles with the demands of mass AI adoption. Imagine every small business, every researcher, every independent developer needing to access vast amounts of compute power. The current infrastructure, with its latency issues and limited availability in certain regions, simply isn't designed for this level of ubiquitous AI. Real-time AI applications, like those in autonomous vehicles or advanced fraud detection, require immediate responses that can be hindered by the physical distance between devices and centralized data centers.

What's Next for AI Compute?

This compute bottleneck may look like a barrier to innovation, but it is also pushing people to come up with clever solutions. Because centralized systems can't keep up, the tech world is looking for new ways to distribute and share AI's computing power. This shift will be key to making AI accessible to everyone and to unlocking the full potential of these new AI models.

Want to know more? Stay tuned to see how DECENTER combines AI, Decentralization, and Cloud Computing into an innovative ecosystem!

Follow us on X and join the movement.

👉 [LINK]